Introduction

Serverless Guru has organized a Serverless Holiday Hackathon where participants compete during the first half of December 2023 to see who can develop the best holiday-themed chat application that uses any LLM.

As part of the Hackathon organization, we developed a demo app to showcase to participants how one could build a relatively simple chat application where the LLM acts like Santa.

This article is meant to aid any participant who might be currently stuck with their project while also acting as an example of the article they need to write as part of the project submission.

Our Demo will be live until the end of December 2023 and can be found under the following link: https://santa.serverlessguru.com/

Demo Video

Some Loom videos have been recorded to showcase the demo application and to provide more context before we do a deep dive into the actual Back-End architecture and development process.

FE Example

The following video provides a quick introduction to the application, its capabilities, and how one could use it from the Front-End app.

API Example (Postman)

Another video has been recorded of the actual WebSocket API behaviour to provide more context into the actual workings of the developed API.

Challenges Faced During Development

Before showcasing the full solution, we want to introduce some of the challenges we faced during development and how we resolved them.

Connecting to an LLM

The main requirement of the Hackathon is that the app has to be holiday-themed and make use of an LLM.

Conversation Chain and Memory

We introduced the usage of the Langchain JS framework to simplify the implementation of a Conversation Chain and the storage of the Conversation History.

This gives the model access to the context of the full conversation, so it can generate more context-aware and valuable responses.
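To make the idea of conversation memory more concrete, here is a minimal, library-free sketch of what such a memory does behind the scenes. It is not the Langchain API: the `ConversationBuffer` class, its `buildPrompt` method, and the prompt layout are hypothetical names and choices made up for illustration only.

```javascript
// Hypothetical, library-free sketch of a conversation buffer.
// A framework memory class takes care of this bookkeeping for us.
class ConversationBuffer {
  constructor(systemPrompt) {
    this.systemPrompt = systemPrompt;
    this.turns = []; // [{ role: "Human" | "AI", text }]
  }

  // Store one turn of the conversation.
  add(role, text) {
    this.turns.push({ role, text });
  }

  // Render the system prompt, the full history, and the new input
  // as a single prompt that can be sent to the model.
  buildPrompt(userInput) {
    const history = this.turns.map((t) => `${t.role}: ${t.text}`).join("\n");
    return [this.systemPrompt, history, `Human: ${userInput}`, "AI:"]
      .filter((part) => part.length > 0)
      .join("\n");
  }
}

const memory = new ConversationBuffer("You are Santa Claus.");
memory.add("Human", "Hi Santa, I'm Alex!");
memory.add("AI", "Ho ho ho! Hello, Alex!");

const prompt = memory.buildPrompt("Do you remember my name?");
console.log(prompt);
```

Because the earlier turns travel with every new prompt, the model can answer follow-up questions that depend on what was said before.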

Getting Streamed Responses

As the cherry on top, we also wanted to implement streamed responses to the user.

This would allow us to generate a more interactive experience on the Front End by completing the response dynamically.

To be able to receive streamed responses and use a conversation chain, we needed to implement a callback on Langchain.

Here is a simplified implementation example:

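    // Accumulates the tokens streamed back by the model.
    let streamedResponse = "";

    await chain.call({ input: userInput }, [
      {
        // Invoked by Langchain every time the LLM emits a new token.
        async handleLLMNewToken(token) {
          streamedResponse += token;
          console.log(streamedResponse);
        },
      },
    ]);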

The full code snippet can be found in the following repository.

Avoiding Timeouts - WebSocket API

As mentioned during our webinar, Building Your First Serverless API with Langchain, one of the challenges developers will face is the time LLMs take to process some prompts.

During the Webinar, we presented different options, such as AppSync Subscriptions and WebSocket APIs.

In this case, we decided to develop a WebSocket API, which resolved the need for bi-directional communication but introduced some additional challenges.

Decoupling long-lasting execution

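Similar to any other API Gateway API, a WebSocket API still has an integration timeout of roughly 30 seconds (29 seconds, to be exact) for the Back End resources to confirm that a message was received correctly, which is often less time than an LLM needs to answer a prompt.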

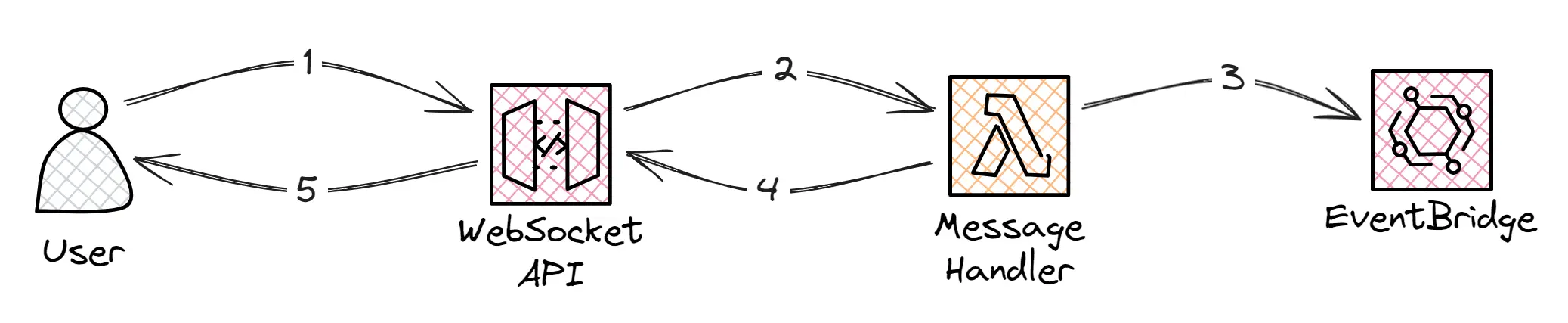

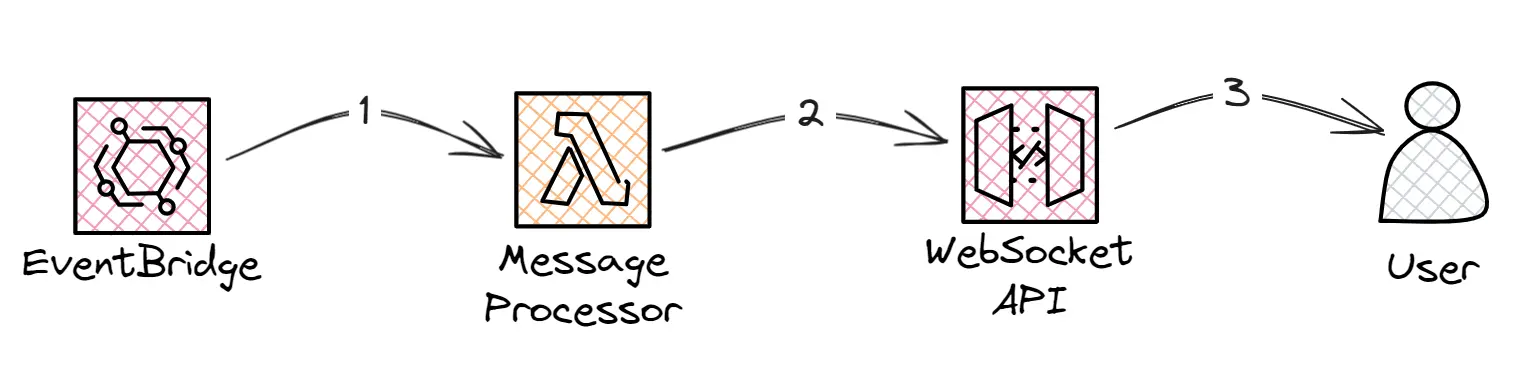

The main idea is to decouple the logic into two different flows.

The first flow, as shown in the image above, is the one limited by the 30-second timeout and is responsible for handling incoming messages. The Message Handler Lambda processes any validation logic required, triggers an event, and replies to the user confirming that the message was received.

The second flow is completely decoupled and can take as long as needed, limited only by the maximum execution time of the Lambda and the 10-minute idle timeout of WebSocket APIs. In this flow, the Message Processor Lambda is triggered by the events generated by the previous flow, executes any processing logic, and takes advantage of the bi-directional communication capabilities to send as many messages as needed back to the user.
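The first flow can be sketched roughly as follows. This is a simplified illustration and not the code of our demo: `handleIncomingMessage` and the injected `publishEvent` function (imagine a thin wrapper around an EventBridge client) are hypothetical names, and the shape of the message body is an assumption.

```javascript
// Flow 1: has to finish well within the integration timeout.
// `publishEvent` is an injected, hypothetical function, so nothing in this
// sketch talks to AWS directly.
async function handleIncomingMessage(event, publishEvent) {
  const connectionId = event.requestContext.connectionId;

  // Validation: the body must be JSON with a non-empty `message` string
  // (assumed message format).
  let body;
  try {
    body = JSON.parse(event.body ?? "");
  } catch {
    return { statusCode: 400, body: JSON.stringify({ error: "Invalid JSON" }) };
  }
  if (typeof body?.message !== "string" || body.message.trim() === "") {
    return { statusCode: 400, body: JSON.stringify({ error: "Missing message" }) };
  }

  // Hand the slow work over to the second flow.
  await publishEvent({
    detailType: "Prompt Received",
    detail: { connectionId, prompt: body.message },
  });

  // Acknowledge right away; the actual answer arrives later over the socket.
  return { statusCode: 200, body: JSON.stringify({ status: "received" }) };
}
```

The handler never waits for the LLM. It only validates, publishes, and acknowledges, which keeps its execution time far below the timeout regardless of how long the model takes.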

Authentication and State Management

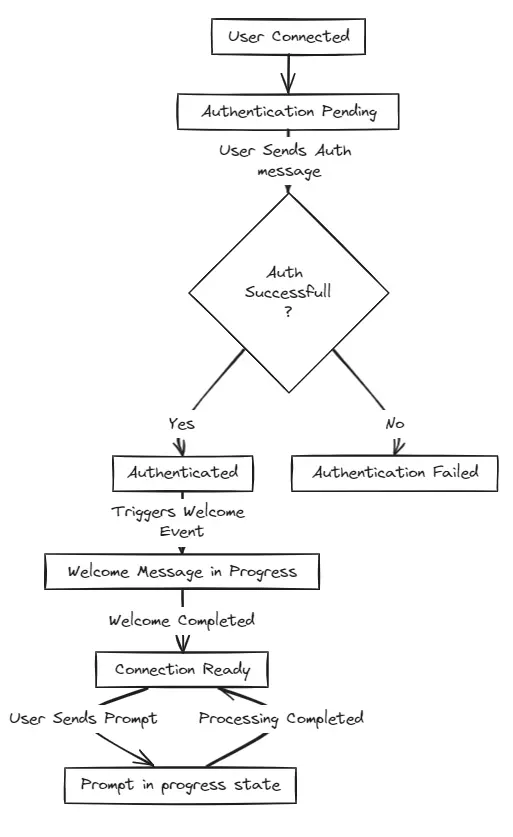

One of the main issues in choosing a WebSocket API is the need to implement the correct state management logic to ensure that the messages are being processed in the required order and that no one tries to exploit the API.

To handle the correct usage and authentication we implemented the following state machine:

The most relevant parts of the implemented state machine are:

No messages will be processed until the user sends a valid authentication message.

If authentication fails, the state will be updated to 'Authentication Failed', and no further action/message will be processed.

User prompts will be processed one at a time and only if the state is 'Connection Ready' when the prompt is received.
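The rules above can be expressed as a small transition table. The sketch below is only an illustration: 'Authentication Failed' and 'Connection Ready' are the states named in this article, while 'Connected', 'Processing Prompt', the action names, and the `nextState` helper are assumptions introduced for the example.

```javascript
const STATES = {
  CONNECTED: "Connected",               // assumed name for a fresh connection
  AUTH_FAILED: "Authentication Failed", // state named in the article
  READY: "Connection Ready",            // state named in the article
  PROCESSING: "Processing Prompt",      // assumed name while a prompt is handled
};

// Allowed transitions: current state -> action -> next state.
// The action names are hypothetical.
const TRANSITIONS = {
  [STATES.CONNECTED]: {
    auth_ok: STATES.READY,
    auth_failed: STATES.AUTH_FAILED,
  },
  [STATES.READY]: { prompt_received: STATES.PROCESSING },
  [STATES.PROCESSING]: { response_sent: STATES.READY },
  [STATES.AUTH_FAILED]: {}, // terminal: nothing else is processed
};

// Returns the next state, or null when the action is not allowed.
function nextState(current, action) {
  return TRANSITIONS[current]?.[action] ?? null;
}

console.log(nextState(STATES.CONNECTED, "prompt_received")); // null: not authenticated yet
console.log(nextState(STATES.READY, "prompt_received"));     // "Processing Prompt"
```

Keeping every allowed transition in one table makes it easy to reject anything else, such as a second prompt that arrives while the first one is still being processed.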

Architecture Overview

After seeing the different challenges that we faced and how we resolved them, we are ready to jump straight into the final architecture.

As part of our final application, we developed two different APIs:

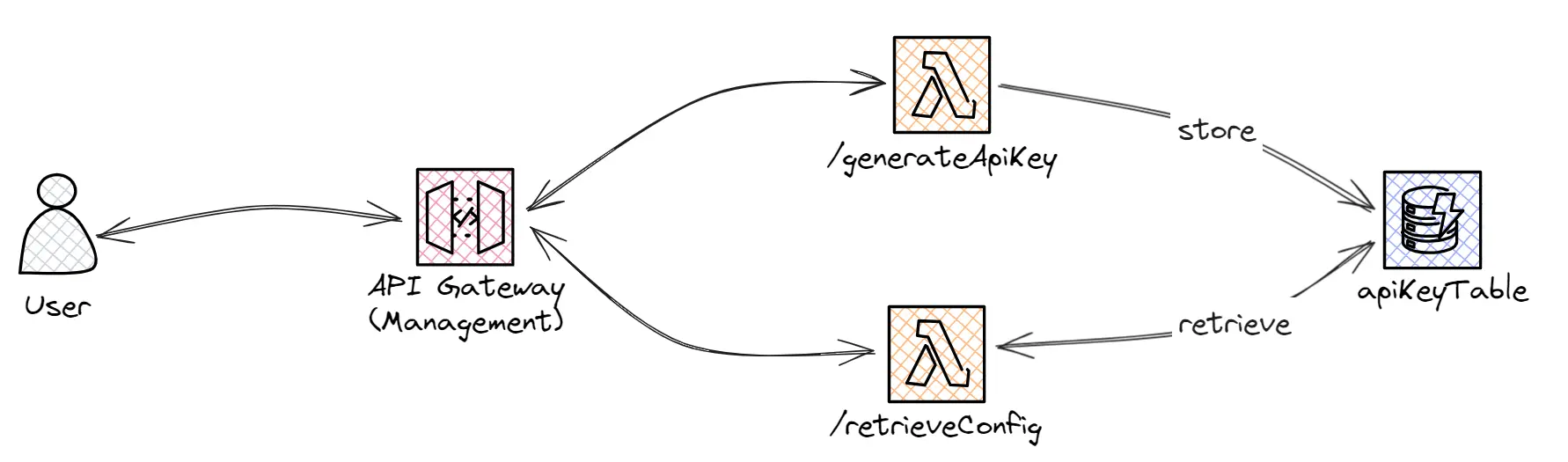

Management API

On one side we have the Management API, which is intended to handle part of the authorization logic.

As part of this REST API, we have two different endpoints:

'/generateApiKey': This endpoint, intended to only be used internally, handles the API Key generation. It can be seen as an API Key vending machine. As WebSocket APIs don’t support API Keys, we’ll store the identifier and associated usage limits on a DynamoDB table.

'/retrieveConfig/{configId}': This endpoint is used by the Front End application to retrieve the API Key information needed to send the authentication message to the WebSocket API.
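As a rough sketch of the "API Key vending machine" idea, the snippet below keeps the keys and their usage limits in an in-memory `Map` that stands in for the DynamoDB table. The function names, the record fields, and the `maxMessages` limit are hypothetical and only meant to show the general shape of the logic.

```javascript
const { randomUUID } = require("node:crypto");

// In-memory stand-in for the DynamoDB table that stores the keys.
const apiKeys = new Map();

// Creates a new key together with its usage limit (hypothetical fields).
function generateApiKey({ maxMessages }) {
  const record = { apiKey: randomUUID(), maxMessages, usedMessages: 0 };
  apiKeys.set(record.apiKey, record);
  return record;
}

// A key is usable when it exists and its limit has not been reached.
function isKeyUsable(apiKey) {
  const record = apiKeys.get(apiKey);
  return record !== undefined && record.usedMessages < record.maxMessages;
}

// Counts one message against the key; returns false when the key is unusable.
function recordUsage(apiKey) {
  if (!isKeyUsable(apiKey)) return false;
  apiKeys.get(apiKey).usedMessages += 1;
  return true;
}

const demoKey = generateApiKey({ maxMessages: 2 });
console.log(isKeyUsable(demoKey.apiKey)); // true
```

Since the limits live next to the key, the WebSocket API can enforce them itself during authentication and message handling.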

WebSocket API

The “main course” would be the WebSocket API, the main API used to implement the Santa Chat application logic.

This API could be divided into the following sections:

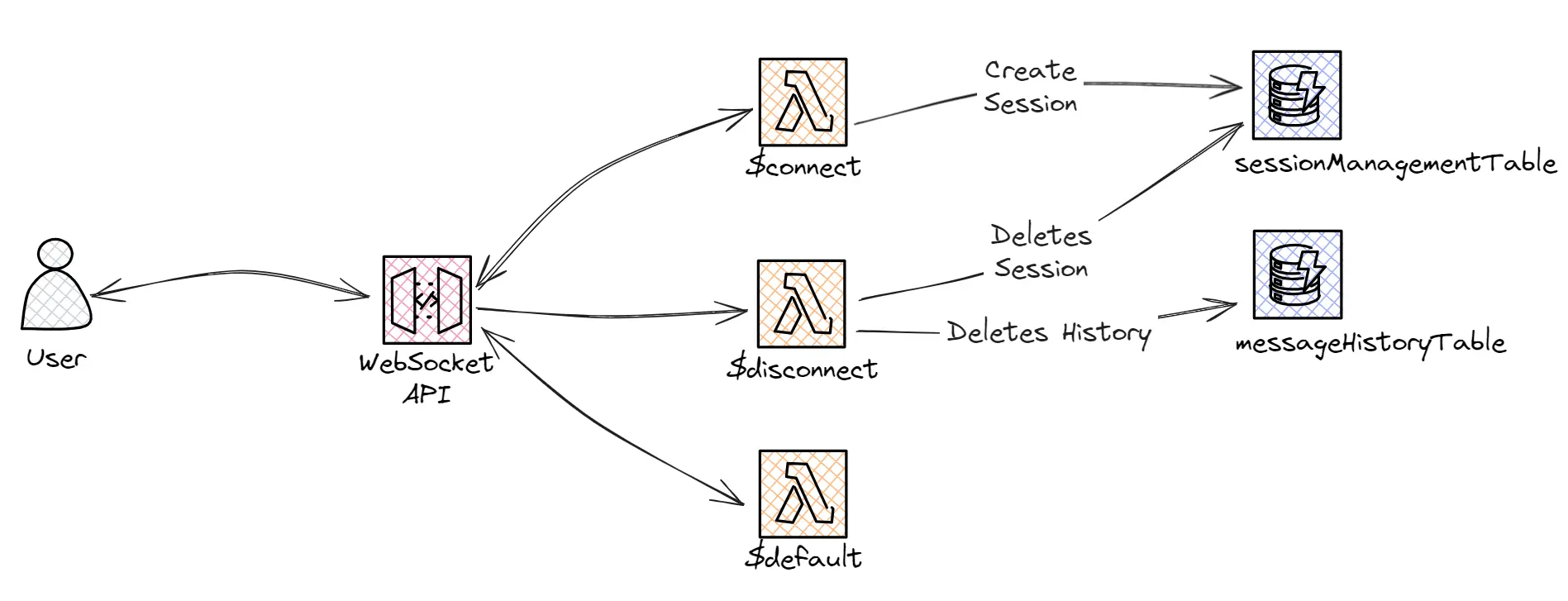

Default Actions

Default actions, or routes, are the ones that handle the default/required functionalities.

As part of this section, we have the following routes:

'$connect': This route is triggered for every connection request. For this route, we implemented some custom logic to create a new 'session' record for the received 'connectionId'. This newly created record is used to manage the different states and ensure we’re able to limit the usage as required.

'$disconnect': This route is triggered whenever a connection is closed. Here we implemented some clean-up logic to delete any stored information linked to the current connection.

'$default': This is the fall-back route, which is triggered when a route for the given action cannot be found. For our specific use case, triggering this route will always return an error message.
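The three default routes could be handled along these lines. This is a simplified sketch: the `sessions` map stands in for the table holding the session records, and the handler name, the 'Connected' initial state, and the error payload are assumptions made for the example.

```javascript
// In-memory stand-in for the session table, keyed by connectionId.
const sessions = new Map();

function handleDefaultRoute(event) {
  const { routeKey, connectionId } = event.requestContext;

  switch (routeKey) {
    case "$connect":
      // Create the session record used to track state and usage.
      sessions.set(connectionId, { state: "Connected", createdAt: Date.now() });
      return { statusCode: 200 };

    case "$disconnect":
      // Clean up everything stored for this connection.
      sessions.delete(connectionId);
      return { statusCode: 200 };

    default:
      // "$default": no route matched the requested action.
      return { statusCode: 400, body: JSON.stringify({ error: "Unknown action" }) };
  }
}

handleDefaultRoute({ requestContext: { routeKey: "$connect", connectionId: "abc" } });
console.log(sessions.has("abc")); // true
```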

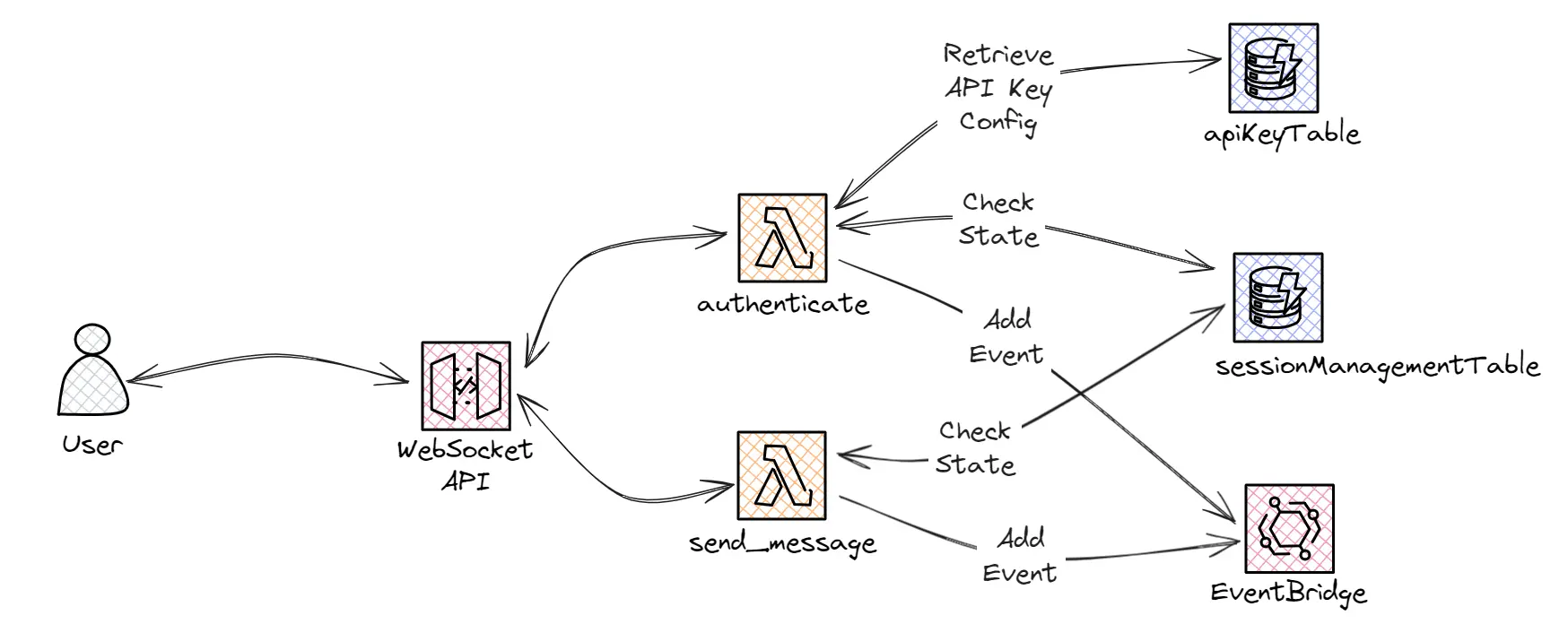

Custom Actions

In addition to the default and required actions, we also implemented some custom routes/actions.

These new custom routes will handle the following logic:

'authenticate': This route will check that the provided API Key is valid and update the Session state accordingly. If the authentication is successful, an 'Authentication Completed' event will be added to the custom EventBridge bus. If it fails, no event will be triggered and the connection won’t accept any further messages.

'send_message': This route is the one responsible for handling any received user prompts. This Lambda will also ensure that the Session State is in the correct status and, if it is, it will add a 'Prompt Received' event to the custom EventBridge bus and set the new Session State.

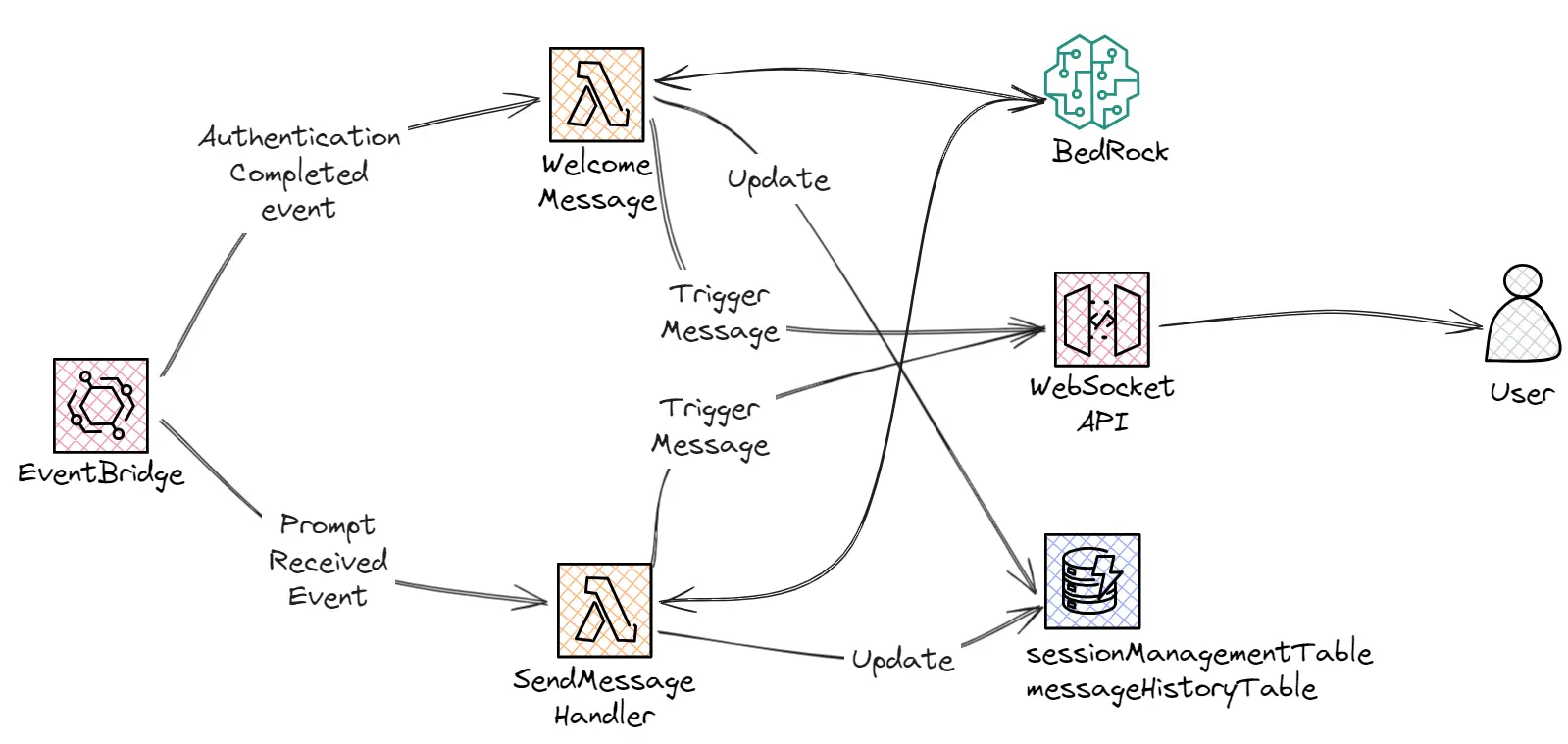

Decoupled Events

The magic sauce, decoupling the events, could be the most relevant part of the architecture.

By moving the requests sent to Amazon Bedrock into an asynchronous process, we ensure that our API does not time out while waiting for the model.

We defined and implemented the following events:

'Authentication Completed': This event is triggered automatically when the authentication process is completed. The main objective of this event is to trigger an initial prompt to Bedrock, which will start the conversation by introducing itself as Santa and setting up the appropriate context.

'Prompt Received': This event is triggered every time we receive a prompt. The SendMessage Handler Lambda will be responsible for formatting and sending the prompt to Bedrock and sending the streamed response to the user.
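The processing side of a 'Prompt Received' event could look roughly like the sketch below. It is an illustration under stated assumptions, not our actual Lambda: `processPrompt` is a hypothetical name, `streamCompletion` stands for whatever calls the LLM and reports tokens through a callback, `sendToConnection` stands for whatever posts a payload back to a WebSocket connection, and the message format is made up.

```javascript
// Flow 2: triggered by the event, free to run much longer than flow 1.
// Both `streamCompletion` and `sendToConnection` are injected, hypothetical
// functions, so the sketch stays independent of any SDK.
async function processPrompt({ connectionId, prompt }, streamCompletion, sendToConnection) {
  let fullResponse = "";

  // Forward every token to the user as soon as the model produces it.
  await streamCompletion(prompt, async (token) => {
    fullResponse += token;
    await sendToConnection(connectionId, { type: "token", token });
  });

  // Tell the client the answer is complete (assumed message format).
  await sendToConnection(connectionId, { type: "end", message: fullResponse });
  return fullResponse;
}
```

Sending a final "end" message is one possible way to let the Front End know that the response is complete, so it can accept the next prompt.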

Next Steps

As mentioned in the article, the approach taken was to develop an MVP with minimal features to showcase how a simple chat application could work.

There is an almost infinite number of possible next steps and improvements; here are a few that we could think of:

Enable speech-to-text and text-to-speech to take the application a step further and make it feel more like a real conversation and not just a chat.

Improve Authentication and allow for persistent message history.

Add additional features, such as an Agent that allows the LLM to query another API, for example to list possible gift ideas or build a custom wishlist to send to Santa.

Conclusions

In conclusion, the process of building an AI chatbot, particularly one themed around Santa for our holiday hackathon, presented a number of challenges but also provided a rich learning experience.

From ensuring efficient handling of long-lasting executions to managing the state of WebSocket APIs, we navigated various complexities and came up with innovative solutions.

This project not only showcases the potential of Large Language Models (LLMs) in crafting engaging conversational experiences but also demonstrates the importance of a well-thought-out architecture in managing the unique challenges of real-time, interactive applications.

As we continue to explore the world of serverless applications and AI chatbots, we look forward to sharing more insights and learnings with our community.